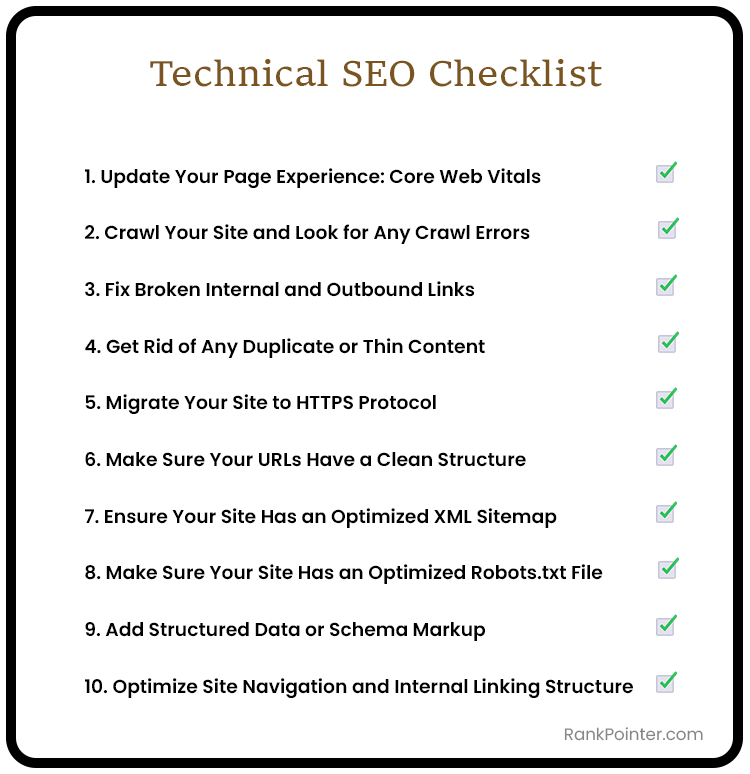

Even the most exceptional content stays hidden from potential readers if search engines struggle to crawl and index it. Technical SEO is the practice of optimizing a website’s technical aspects so that search engines can efficiently find and understand its content. Below is a technical SEO checklist for beginners that offers a starting point for optimization efforts.

Technical SEO Audit Checklist

Check out the technical search engine optimization checklist.

1. Update Your Page Experience: Core Web Vitals

Google’s page experience signals combine Core Web Vitals with existing search signals: mobile-friendliness, safe browsing, HTTPS security, and intrusive interstitial guidelines. Core Web Vitals consist of three metrics:

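- First Input Delay (FID): FID measures the delay between a user’s first interaction with the page and the moment the browser begins responding to it. A good user experience requires an FID of less than 100 ms. Google replaced FID with Interaction to Next Paint (INP) as a Core Web Vital in March 2024; a good INP is 200 ms or less.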

- Largest Contentful Paint (LCP): LCP measures how long the largest content element visible on the screen takes to render. A good LCP is 2.5 seconds or less.

- Cumulative Layout Shift (CLS): CLS measures visual stability, that is, how much on-screen elements shift unexpectedly while the page loads. CLS is a unitless score, and websites should aim for a value of less than 0.1.
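As a rough illustration, the thresholds above can be turned into a small check. This is a minimal sketch: the function name and the sample measurements are hypothetical, and it assumes values exactly at a threshold still count as good.

```python
# "Good" thresholds taken from the list above.
GOOD_THRESHOLDS = {
    "FID": 100.0,  # milliseconds
    "LCP": 2.5,    # seconds
    "CLS": 0.1,    # unitless score
}


def failing_vitals(measurements):
    """Return the names of the metrics that exceed their 'good' threshold."""
    return sorted(
        name
        for name, value in measurements.items()
        if name in GOOD_THRESHOLDS and value > GOOD_THRESHOLDS[name]
    )


# Hypothetical measurements for a single page.
sample = {"FID": 80, "LCP": 3.1, "CLS": 0.05}
print(failing_vitals(sample))  # ['LCP']
```

In practice the measurements would come from a tool such as Google PageSpeed Insights rather than being typed in by hand.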

Various tools, notably Google PageSpeed Insights, aid in enhancing site speed and Core Web Vitals. Key optimizations to boost website speed include:

- Implementing lazy-loading for non-critical images

- Optimizing image formats for improved browser rendering

- Enhancing JavaScript performance
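To find candidates for lazy-loading, one option is to scan a page for images that do not declare the `loading="lazy"` attribute. The sketch below uses only Python’s standard library; the class name, function name, and sample markup are illustrative assumptions.

```python
from html.parser import HTMLParser


class LazyImageAudit(HTMLParser):
    """Collect the src of every <img> tag that does not declare loading="lazy"."""

    def __init__(self):
        super().__init__()
        self.eager_images = []

    def handle_starttag(self, tag, attrs):
        if tag != "img":
            return
        attributes = dict(attrs)
        if (attributes.get("loading") or "").lower() != "lazy":
            self.eager_images.append(attributes.get("src") or "")


def find_eager_images(html):
    audit = LazyImageAudit()
    audit.feed(html)
    return audit.eager_images


# Hypothetical page fragment.
page = """
<img src="/hero.jpg" alt="Hero">
<img src="/gallery-1.jpg" alt="Gallery" loading="lazy">
<img src="/gallery-2.jpg" alt="Gallery">
"""
print(find_eager_images(page))  # ['/hero.jpg', '/gallery-2.jpg']
```

The result is a review list, not a to-do list: critical images near the top of the page, such as the hero image here, should generally keep loading eagerly.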

2. Crawl Your Site and Look for Any Crawl Errors

Ensuring your site is free from crawl errors is paramount for optimal performance. Crawl errors occur when a search engine attempts to access a page on your website but encounters difficulties.

Utilize tools like Screaming Frog or other online website crawl tools to conduct a thorough analysis. After crawling the site, meticulously inspect for any crawl errors. Additionally, leverage Google Search Console to verify and rectify issues.

When scanning for crawl errors, make sure to:

- Implement all permanent redirects as 301 redirects.

- Review every page returning a 4xx or 5xx error and decide where it should redirect.
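Once a crawler has exported each URL with its status code, the results can be sorted into review buckets. The following is a minimal sketch; the bucket names and sample data are assumptions, not the output format of any particular tool.

```python
from collections import defaultdict


def group_crawl_results(results):
    """Group (url, status_code) pairs into buckets for review."""
    buckets = defaultdict(list)
    for url, status in results:
        if 200 <= status < 300:
            buckets["ok"].append(url)
        elif status == 301:
            buckets["redirect_301"].append(url)
        elif 300 <= status < 400:
            # Redirects that are not 301s: check whether they should be.
            buckets["redirect_other"].append(url)
        elif 400 <= status < 500:
            buckets["client_error_4xx"].append(url)
        elif 500 <= status < 600:
            buckets["server_error_5xx"].append(url)
        else:
            buckets["other"].append(url)
    return dict(buckets)


# Hypothetical crawl export.
crawl = [("/", 200), ("/old", 301), ("/temp", 302), ("/gone", 404), ("/api", 500)]
for bucket, urls in group_crawl_results(crawl).items():
    print(bucket, urls)
```

The `redirect_other`, `client_error_4xx`, and `server_error_5xx` buckets are the ones that correspond to the two checks above.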

3. Fix Broken Internal and Outbound Links

Broken links hurt both user experience and search engine optimization, and users find it frustrating to click links that lead nowhere. Check for the following issues when auditing your links:

- Links redirecting with 301 or 302 status codes

- Links leading to 4XX error pages

- Orphaned pages lacking any inbound links

- Overly complex internal linking structures

To fix broken links, update the link to point at the correct target URL, or remove links to pages that no longer exist.
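Two of the issues listed above, links to missing pages and orphaned pages, can be detected from a simple map of internal links. The sketch below assumes a hypothetical `link_graph` dictionary in which every live page is a key and its value is the list of internal URLs it links to.

```python
def audit_links(link_graph, home="/"):
    """Return (broken_links, orphaned_pages) for a map of page -> linked pages."""
    live_pages = set(link_graph)

    # A link is treated as broken when its target is not a known live page.
    broken = sorted(
        (source, target)
        for source, targets in link_graph.items()
        for target in targets
        if target not in live_pages
    )

    # A page is orphaned when no other page links to it (the home page is exempt).
    linked_to = {
        target
        for source, targets in link_graph.items()
        for target in targets
        if target != source
    }
    orphaned = sorted(live_pages - linked_to - {home})
    return broken, orphaned


# Hypothetical site structure.
site = {
    "/": ["/blog", "/pricing"],
    "/blog": ["/", "/blog/post-1", "/old-page"],
    "/blog/post-1": ["/blog"],
    "/pricing": ["/"],
    "/landing-2019": ["/pricing"],
}
broken, orphaned = audit_links(site)
print(broken)    # [('/blog', '/old-page')]
print(orphaned)  # ['/landing-2019']
```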

4. Get Rid of Any Duplicate or Thin Content

Eliminating duplicate or thin content is essential for maintaining a high-quality website. Duplicate content can arise from various sources, such as faceted navigation, multiple live versions of the site, or copied content.

It’s crucial to ensure that only one version of your site is indexed by search engines. For instance, search engines perceive the following domains as distinct websites:

- https://www.abc.com

- https://abc.com

- http://www.abc.com

- http://abc.com

To address duplicate content, consider the following strategies:

- Set up 301 redirects to direct all versions to the preferred URL (e.g., https://www.abc.com).

- Implement no-index or canonical tags on duplicate pages to instruct search engines on the primary version.

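- Specify the preferred domain in Google Search Console where that setting is available. Google has retired it from current versions of Search Console, so the redirects and canonical tags above are now the main way to consolidate indexing.

- Manage URL parameter variations deliberately. Google has also retired the URL Parameters tool in Search Console, so handle parameters with canonical tags, consistent internal links, and robots.txt rules.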

- Delete duplicate content whenever possible to streamline the website’s content structure.
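The redirect strategy above amounts to mapping every scheme and host variant onto one preferred URL. The following sketch shows that mapping in Python; the constant names are assumptions, and on a real site the rule would normally live in the web server or CDN configuration rather than in application code.

```python
from urllib.parse import urlsplit, urlunsplit

PREFERRED_SCHEME = "https"
PREFERRED_HOST = "www.abc.com"
HOST_VARIANTS = {"abc.com", "www.abc.com"}


def preferred_url(url):
    """Return the URL that a 301 redirect should point to for this request."""
    parts = urlsplit(url)
    host = (parts.hostname or "").lower()
    if host not in HOST_VARIANTS:
        return url  # Not one of our domains, so leave it untouched.
    path = parts.path or "/"
    return urlunsplit(
        (PREFERRED_SCHEME, PREFERRED_HOST, path, parts.query, parts.fragment)
    )


variants = [
    "https://www.abc.com",
    "https://abc.com",
    "http://www.abc.com",
    "http://abc.com",
]
print({preferred_url(u) for u in variants})  # {'https://www.abc.com/'}
```

All four variants collapse to a single URL, which is the outcome the 301 redirects are meant to achieve.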

5. Migrate Your Site to HTTPS Protocol

Google announced in 2014 that HTTPS is a ranking signal, so migrating your site to HTTPS is now effectively a requirement.

HTTPS encrypts the data exchanged between your visitors and your server, reducing the risk that it is intercepted or tampered with in transit. If your site is still operating on HTTP, it’s time to switch to HTTPS for better security and compliance with modern web standards.

6. Make Sure Your URLs Have a Clean Structure

Google emphasizes that a website’s URL structure should prioritize simplicity. Complex URLs can hinder crawler efficiency, potentially leading to incomplete indexing of your site’s content.

Problematic URLs often arise from:

- Sorting parameters: Some e-commerce sites offer multiple sorting options for the same items, resulting in excessive URLs. For example: http://www.example.com/results?search_type=search_videos&search_query=tpb&search_sort=relevance&search_category=25

- Irrelevant parameters: Unnecessary parameters like referral codes can clutter URLs, complicating navigation. For example: http://www.example.com/search/noheaders?click=6EE2BF1AF6A3D705D5561B7C3564D9C2&clickPage=OPD+Product+Page&cat=79

Trimming unnecessary parameters whenever feasible helps streamline URLs, enhancing both user experience and crawler efficiency.
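As a sketch of what trimming can look like, the function below removes a configurable set of query parameters from a URL. Which parameters count as unnecessary is site-specific; treating `click` and `clickPage` as removable is an assumption made for this example.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Assumed to be tracking-only parameters that do not change the page content.
STRIP_PARAMS = {"click", "clickPage"}


def strip_params(url, strip=STRIP_PARAMS):
    """Return the URL with the named query parameters removed."""
    parts = urlsplit(url)
    kept = [
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key not in strip
    ]
    return urlunsplit(
        (parts.scheme, parts.netloc, parts.path, urlencode(kept), parts.fragment)
    )


messy = (
    "http://www.example.com/search/noheaders"
    "?click=6EE2BF1AF6A3D705D5561B7C3564D9C2&clickPage=OPD+Product+Page&cat=79"
)
print(strip_params(messy))  # http://www.example.com/search/noheaders?cat=79
```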

7. Ensure Your Site Has an Optimized XML Sitemap

XML sitemaps communicate your site’s structure to search engines and tell them which URLs you would like to appear in the Search Engine Results Pages (SERP).

An XML sitemap should include:

- New content additions, including recent blog posts, products, etc.

- Only URLs returning a 200 status code.

- No more than 50,000 URLs per sitemap file. If your site exceeds this limit, split the URLs across multiple XML sitemaps to optimize crawl budget allocation.

An XML sitemap should exclude:

- URLs containing parameters

- URLs that 301 redirect elsewhere, are canonicalized to a different URL, or carry a no-index tag

- URLs returning 4xx or 5xx status codes

- Duplicate content
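Several of the rules above can be applied mechanically when generating a sitemap. The sketch below is a simplified illustration: it assumes a hypothetical list of `(url, status_code)` pairs from a crawl, keeps only 200 responses without query parameters, drops repeats, and splits the result into files of at most 50,000 URLs. Canonical and no-index checks are left out.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"
MAX_URLS_PER_SITEMAP = 50_000


def eligible_urls(pages):
    """Yield each URL once if it returned 200 and has no query parameters."""
    seen = set()
    for url, status in pages:
        if status == 200 and "?" not in url and url not in seen:
            seen.add(url)
            yield url


def build_sitemaps(pages, max_urls=MAX_URLS_PER_SITEMAP):
    """Return a list of sitemap XML strings, each holding at most max_urls URLs."""
    urls = list(eligible_urls(pages))
    documents = []
    for start in range(0, len(urls), max_urls):
        urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
        for url in urls[start:start + max_urls]:
            ET.SubElement(ET.SubElement(urlset, "url"), "loc").text = url
        documents.append(ET.tostring(urlset, encoding="unicode"))
    return documents


# Hypothetical crawl results.
pages = [
    ("https://www.example.com/", 200),
    ("https://www.example.com/blog", 200),
    ("https://www.example.com/old", 301),
    ("https://www.example.com/shop?sort=price", 200),
    ("https://www.example.com/missing", 404),
    ("https://www.example.com/blog", 200),
    ("https://www.example.com/about", 200),
]
# A tiny limit is used here only to demonstrate the splitting.
for document in build_sitemaps(pages, max_urls=2):
    print(document)
```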

8. Make Sure Your Site Has an Optimized Robots.txt File

Robots.txt files serve as directives for search engine robots, instructing them on which parts of your website they may crawl. Because every website has a limited “crawl budget,” it is important that crawlers spend it on your essential pages rather than on low-value URLs.

On the other hand, it’s vital to avoid blocking content you want indexed. Here are examples of URLs to disallow in your robots.txt file:

- Temporary files

- Admin pages

- Cart & checkout pages

- Search-related pages

- URLs containing parameters

Additionally, include the location of the XML sitemap in the robots.txt file. Use the robots.txt report in Google Search Console, which replaced the older robots.txt tester, to verify that the file works as intended.
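A robots.txt file can also be checked locally before it goes live. The sketch below parses a sample file with Python’s standard `urllib.robotparser` module and confirms that important pages remain crawlable while the disallowed sections are blocked. The paths and domain are assumptions for illustration, and wildcard patterns are left out of this sketch.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content.
ROBOTS_TXT = """\
User-agent: *
Disallow: /tmp/
Disallow: /admin/
Disallow: /cart/
Disallow: /checkout/
Disallow: /search

Sitemap: https://www.example.com/sitemap.xml
"""


def load_rules(robots_txt):
    """Parse robots.txt text without fetching anything over the network."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


rules = load_rules(ROBOTS_TXT)
base = "https://www.example.com"

# Pages that must stay crawlable, and pages that should be blocked.
for path in ["/", "/blog/technical-seo", "/products/shoes"]:
    print(path, "allowed:", rules.can_fetch("Googlebot", base + path))
for path in ["/admin/users", "/cart/", "/search?q=shoes"]:
    print(path, "allowed:", rules.can_fetch("Googlebot", base + path))
```

Running a check like this against a list of your most important URLs is a quick way to catch a rule that accidentally blocks content you want indexed.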

9. Add Structured Data or Schema Markup

Structured data enriches page content by providing information about its meaning and context to search engines, notably Google. This can enhance organic listings on the search engine results pages with rich results.

Schema.org markup, the most widely used structured data vocabulary, offers schemas for describing people, places, organizations, local businesses, reviews, and more.

To implement structured data, consider using an online schema markup generator to create the markup. Then validate it with Google’s Rich Results Test or the Schema Markup Validator, which replaced Google’s Structured Data Testing Tool.
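Structured data is commonly embedded in a page as a JSON-LD script tag. The sketch below builds one for an article using the Schema.org `Article` type; the function name and every value passed to it are placeholders, and the chosen properties are only a small illustrative subset.

```python
import json

SCRIPT_OPEN = '<script type="application/ld+json">'
SCRIPT_CLOSE = "</script>"


def article_json_ld(headline, author, date_published, url):
    """Return a JSON-LD script tag describing an article."""
    data = {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "author": {"@type": "Person", "name": author},
        "datePublished": date_published,
        "mainEntityOfPage": url,
    }
    # Escape "</" so the content can never close the script tag early.
    body = json.dumps(data, indent=2).replace("</", "<\\/")
    return SCRIPT_OPEN + body + SCRIPT_CLOSE


# Placeholder values for illustration only.
tag = article_json_ld(
    headline="Technical SEO Checklist",
    author="Jane Doe",
    date_published="2024-01-15",
    url="https://www.example.com/blog/technical-seo-checklist",
)
print(tag)
```

The generated tag goes inside the page’s HTML, and the result should still be run through a validator before publishing.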

10. Optimize Site Navigation and Internal Linking Structure

Efficient site navigation and a well-structured internal linking system are essential for enhancing user experience and search engine discoverability. Here’s how to optimize them:

- Ensure intuitive navigation menus that guide users seamlessly through your website’s content.

- Maintain consistency in navigation across all pages for easy exploration.

- Organize content hierarchically, with important pages prioritized higher in the navigation structure.

- Use descriptive anchor text for internal links to provide context and improve user understanding.

- Implement breadcrumbs to help users understand their location within the site’s hierarchy and facilitate navigation.

- Ensure all pages are linked to from somewhere within the site to prevent orphaned pages that are inaccessible to users and search engines.

- Develop a robust internal linking strategy to connect relevant pages, distribute link equity, and improve crawlability.

By optimizing site navigation and internal linking, you enhance user experience, improve search engine visibility, and maximize the potential of your website.
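One way to evaluate a navigation hierarchy is to measure click depth, meaning how many clicks each page is from the home page. The sketch below runs a breadth-first search over a hypothetical `link_graph` dictionary that maps each page to the internal pages it links to; pages the search never reaches cannot be found by following links from the home page.

```python
from collections import deque


def click_depths(link_graph, home="/"):
    """Return the minimum number of clicks from home to each reachable page."""
    depths = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in link_graph.get(page, []):
            if target not in depths:
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


def unreachable_pages(link_graph, home="/"):
    """Return known pages that cannot be reached by following links from home."""
    return sorted(set(link_graph) - set(click_depths(link_graph, home)))


# Hypothetical site structure.
site_links = {
    "/": ["/blog", "/services"],
    "/blog": ["/blog/post-1"],
    "/blog/post-1": ["/blog/post-2"],
    "/blog/post-2": [],
    "/services": [],
    "/forgotten": ["/"],
}
print(click_depths(site_links))
print(unreachable_pages(site_links))  # ['/forgotten']
```

Important pages that turn out to sit many clicks deep are candidates for a link from a higher level of the hierarchy.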

Wrapping Up: Technical SEO Checklist

Streamlining technical SEO elements is important for enhancing website performance and search engine visibility. By following this comprehensive checklist, you can ensure your website is set up for success.